- INSTALL APACHE SPARK ON WINDOWS HOW TO

- INSTALL APACHE SPARK ON WINDOWS INSTALL

- INSTALL APACHE SPARK ON WINDOWS DOWNLOAD

- INSTALL APACHE SPARK ON WINDOWS WINDOWS

This directory will be accessed by the container; that is what the -v option is for. Replace "D:\sparkMounted" with your local working directory.


Installing and running Spark & connecting with Jupyter

After downloading the image with docker pull, this is how you start it on Windows 10:

`docker run -p 8888:8888 -p 4040:4040 -v D:\sparkMounted:/home/jovyan/work --name spark jupyter/pyspark-notebook`
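To make the parts of that command easier to see, here is a minimal sketch that assembles the same argument list in Python. The function name `build_docker_run` and its parameters are hypothetical, introduced only for illustration; the defaults mirror the command above, where port 8888 serves Jupyter and port 4040 serves the Spark web UI.

```python
from typing import List, Sequence


def build_docker_run(
    host_dir: str,
    ports: Sequence[int] = (8888, 4040),
    container_dir: str = "/home/jovyan/work",
    name: str = "spark",
    image: str = "jupyter/pyspark-notebook",
) -> List[str]:
    """Assemble the `docker run` arguments used in this post (illustrative helper)."""
    args = ["docker", "run"]
    # Publish each port under the same number on the host.
    for port in ports:
        args += ["-p", f"{port}:{port}"]
    # Mount the local working directory into the container.
    args += ["-v", f"{host_dir}:{container_dir}"]
    # Note the double dash: `--name`, not `-name`.
    args += ["--name", name, image]
    return args


if __name__ == "__main__":
    # Replace the path with your own local working directory.
    print(" ".join(build_docker_run(r"D:\sparkMounted")))
```

The list form can be handed to `subprocess.run` as-is if you prefer to start the container from a script rather than from the command prompt.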

The summary below is hopefully everything you need to get started with this image. However, if this is not sufficient, see the Docker image documentation here, or read this useful third-party usage commentary by Max Melnick. Max describes Jupyter and Docker in greater detail, and that page is recommended if you have not used Docker or Jupyter much before. I will not repeat this here but instead focus on understanding and testing various ways to access and use the stack, assuming that the reader has a basic Docker/Jupyter background.

You will be using this Spark and Python setup if you are part of my Big Data classes. Also do check my consulting & training page.

Having tried various preloaded Docker Hub images, I started liking this one: jupyter/pyspark-notebook.


My suggestion for the quickest install is to get a Docker image with everything (Spark, Python, Jupyter) preinstalled. Obviously, this will run Spark in local standalone mode, so you will not be able to run Spark jobs in a distributed environment.
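Once Jupyter is up, a quick way to confirm that Spark works in local mode is to run a tiny job and compare it with a value computed in plain Python. The following is a minimal sketch, assuming `pyspark` is importable in the notebook kernel; the function names and the `smoke-test` application name are hypothetical.

```python
def expected_sum_of_squares(n: int) -> int:
    """Closed form for 0^2 + 1^2 + ... + (n-1)^2, used as the reference value."""
    return (n - 1) * n * (2 * n - 1) // 6


def spark_smoke_test(n: int = 1000) -> bool:
    """Run a small job on a local Spark session and check the result."""
    # Imported here so the helper above stays usable without pyspark installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master("local[*]")       # local mode: all cores of this machine, no cluster
        .appName("smoke-test")    # hypothetical name, shown in the Spark UI
        .getOrCreate()
    )
    try:
        total = (
            spark.sparkContext
            .parallelize(range(n))
            .map(lambda x: x * x)
            .sum()
        )
    finally:
        spark.stop()
    return total == expected_sum_of_squares(n)
```

Calling `spark_smoke_test()` in a notebook cell should return `True` if the local session starts and the job completes. While the job runs, the Spark UI should be reachable on port 4040.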


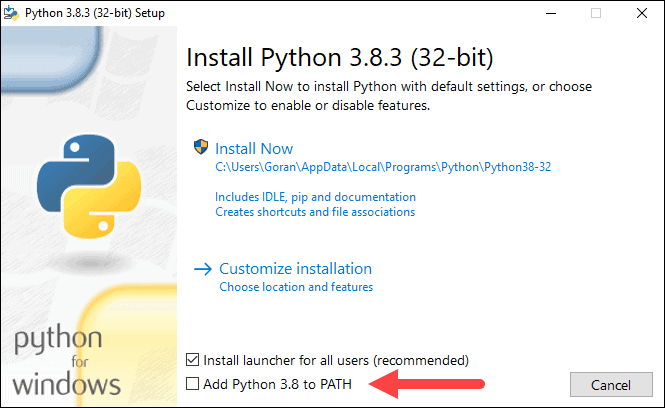

Apache Spark is a popular distributed computation environment. It is written in Scala; however, you can also interface with it from Python. For those who want to learn Spark with Python (including students of these BigData classes), here's an intro to the simplest possible setup. To experiment with Spark and Python (PySpark or Jupyter), you need to install both. Here is how to get such an environment on your laptop, along with some troubleshooting you might need to get through.

Set environment variables on your machine after steps 1 and 2 are done. Steps are mentioned below.

Control Panel > System and Security > System > Advanced System Settings (requires admin privileges)

Control Panel > User Accounts > User Accounts > Change my Environment Variables

a. Set SPARK_HOME in Environment variables.
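After setting SPARK_HOME, it can help to verify the value from a fresh command prompt, since environment variable changes are only picked up by newly started processes. Below is a minimal sketch of such a check; the function name `check_spark_env` is hypothetical, and the only layout it assumes is that the unpacked Spark distribution contains a `bin` directory.

```python
import os
from pathlib import Path
from typing import List, Mapping, Optional


def check_spark_env(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return a list of problems found with SPARK_HOME (empty list means OK)."""
    env = os.environ if env is None else env
    problems: List[str] = []

    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        problems.append("SPARK_HOME is not set")
    elif not Path(spark_home).is_dir():
        problems.append(f"SPARK_HOME points to a missing directory: {spark_home}")
    elif not (Path(spark_home) / "bin").is_dir():
        # The launch scripts live under bin in the unpacked distribution.
        problems.append(f"No bin directory found under SPARK_HOME: {spark_home}")
    return problems


if __name__ == "__main__":
    issues = check_spark_env()
    print("SPARK_HOME looks fine" if not issues else "\n".join(issues))
```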


In this post I will tell you how to install Apache Spark on a Windows machine. By the end of this tutorial you'll be able to use Spark with Scala on Windows. Happy learning 🙂

STEPS:

1. Install Java 8 on your machine. Open the command prompt and type java -version to check if Java is installed on your machine.

2. Download Apache Spark distribution. After installing Java 8 in step 1, download Spark and choose "Pre-built for Apache Hadoop 2.7 and later" as shown in the picture. After downloading Spark, unpack the distribution in a directory.
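The `java -version` check can also be scripted, which is handy when several machines need the same setup. The sketch below is illustrative only: the function names are hypothetical, and the parser assumes the usual output shape, in which Java 8 reports itself as `1.8.0_...` while later releases report the major version first (for example `11.0.2`).

```python
import re
import subprocess
from typing import Optional


def parse_java_major(version_output: str) -> Optional[int]:
    """Extract the major Java version from `java -version` output, if present."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if not match:
        return None
    first = int(match.group(1))
    # Legacy scheme: "1.8.0_281" means Java 8.
    if first == 1 and match.group(2):
        return int(match.group(2))
    return first


def installed_java_major() -> Optional[int]:
    """Run `java -version` and return the major version, or None if unavailable."""
    try:
        result = subprocess.run(
            ["java", "-version"], capture_output=True, text=True, check=False
        )
    except FileNotFoundError:
        return None  # java is not on PATH
    # `java -version` prints to stderr; stdout is included as a fallback.
    return parse_java_major(result.stderr + result.stdout)


if __name__ == "__main__":
    print("Detected Java major version:", installed_java_major())
```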
